May 2023

I get the impression that most people have either forgotten or never heard about Bill Gates’ bold assertion that the Internet wouldn’t come to anything. I guess he’s remembered for other stuff he did (and does!) – more astute business decisions, addressing the issues of the moment. Zuckerberg, on the other hand, thought that the future would be inside his metaverse, and his empire, similar to that of Microsoft, almost seems big enough for him to be able to decide what our future will be like. But it isn’t. So, who should we listen to when it comes to predicting the future? Certainly not me!

I was happily driving back from a long weekend away at the start of the month, feeling good on the open road, and decided to announce to my wife – who was quietly browsing through the news at the time – that all this nonsense about ChatGPT and large language models would, in fact, come to nothing (apart from causing minor damage such as wiping out the translation industry). She responded by reading out an article from El País summarising a paper in the journal Nature Neuroscience about a University of Texas research project. The researchers claimed to have trained a “language decoder” on specific test subjects that could produce text summarising what those subjects were looking at or thinking about once they had been shoved into some functional magnetic resonance imaging kit and connected up! I almost had to pull over and start the breathing exercises. Brain-machine interfaces are fundamental to my story! (Okay, Doshi prefers an interface based on symbols rather than words, but … we’re already there in my “future”?) And isn’t this mind-reading?

I convinced myself it was a flash in the pan and carried on driving, taking the occasional deep breath just in case. And then, a few days later, Geoffrey Hinton, the “Godfather of AI”, resigned from Google because he wanted to be free to warn the world about the possible “end of human civilisation” (as we know it!), which “might well be inevitable”. Damn!

Already depressed, I listened to a podcast with an interview Hinton gave to my favourite tech guru at the Guardian (I’m not pushing the link this time, or you’ll think I get a commission – I’ll just point out that Hinton talked to the Guardian the week before visiting the White House or accepting Elon Musk’s invitation).

How to summarise? It’s basically the usual stuff: deep learning and neural networks, the technology Hinton pioneered, which didn’t really work until the necessary computing power recently became available. And it’s the rate of improvement over the last few months that made him jump ship. His short-term fears seem to involve a vision of the Internet completely swamped by “fake news” (would we really notice the difference?), followed by societal upheaval and then the danger that, in perhaps 5 to 20 years, “humanity will not be in a position to decide its own future”.

I’m about to finish here, so I’m looking for the closest I can get to a “happy ending”. The first encouraging thought was that we’ve had nuclear weapons for longer than most of us have been alive – and we’re still here. We somehow found a way to “control” the monster we had created. I think a similar approach to “battlefield robots” is probably applicable: they’re not going to suddenly appear on every street corner, poised to destroy us, are they? (On the other hand, the Internet of Things – everything connected and hackable – suddenly sounds like a really dodgy idea.)

The “millennium bug” was perhaps a more trivial case of identifying a danger and taking action suitably far in advance. (I suspect the company I worked for at the time pocketed some handy cash to address the issue, even though we used an archaic operating system that worked off internal dates – where zero was a date sometime in the 1970s! – and we had already trawled the software for the moment the internal date changed from a four-digit number to a five-digit one!) But it’s true that planes didn’t fall out of the sky, perhaps because we overreacted.

And, finally, self-driving cars are great ninety-nine percent of the time, but that last one percent – the situations in which they are a complete disaster – is really hard to sort out. What if AI is the same? What if, having read everything that has ever been written and shared it instantly between them, the AIs of the future still don’t know enough to use all that knowledge to make us believe whatever they want (as Hinton claimed they would be able to)? And surely without that last one percent they will never achieve the “agency” required to destroy us intentionally, let alone the “consciousness” the aficionados used to talk so much about. Maybe they’ll just talk and talk and talk. A bit like me, really.

Kindle deal announcement – and less rambling – next month!

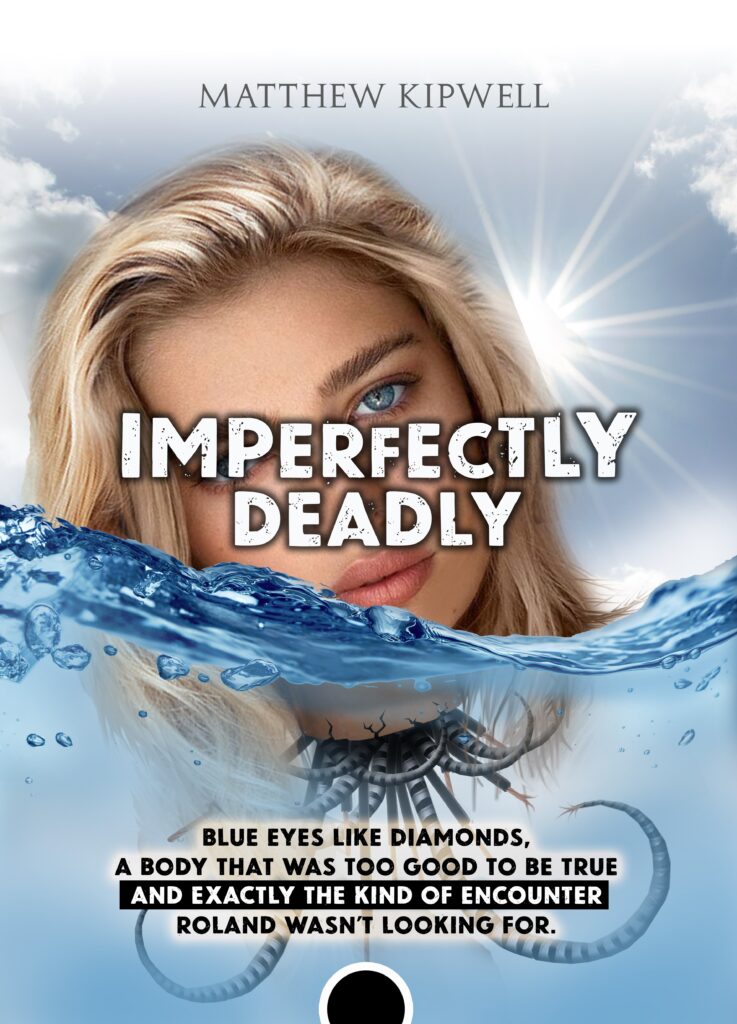

PS – Can’t resist signing off with my own beautiful/scary vision of the future:

‘Let’s not patronise each other. It’s such a waste of time!’